X: the independent/treatment/explanatory/predictor variable (can be controlled or manipulated).

Y: the dependent/response variable.

Linear:

The scatterplot points fall roughly along a straight line. For each one-unit change in X, Y changes by a constant amount on average.
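Here is a minimal Python sketch of the "constant change on average" idea. The least-squares slope m is the average change in Y per one-unit change in X. The helper name `least_squares_line` is made up for illustration. The formulas are the usual m = Sxy/Sxx and b = ȳ - m·x̄, and the year/CPI numbers are copied from the data listed later in these notes.

```python
from statistics import fmean


def least_squares_line(xs, ys):
    """Return (m, b) for the least-squares line y = m*x + b (illustrative helper)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    x_bar, y_bar = fmean(xs), fmean(ys)
    # Sxy and Sxx: sums of products of deviations from the means
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are equal; slope is undefined")
    m = s_xy / s_xx          # average change in y per one-unit change in x
    b = y_bar - m * x_bar    # puts the line through (x_bar, y_bar)
    return m, b


# Exactly linear data, y = 3 + 2x: each unit step in x adds exactly 2 to y.
m, b = least_squares_line([0, 1, 2, 3, 4], [3, 5, 7, 9, 11])
print(m, b)  # 2.0 3.0

# year (1960 = 1) vs CPI, copied from the data in these notes
year = [1, 13, 26, 35, 42, 43, 49, 53, 55, 59]
cpi = [29.6, 44.4, 109.6, 152.4, 180, 184, 214.5, 233, 237, 252.2]
m_cpi, b_cpi = least_squares_line(year, cpi)
print(f"CPI rises by about {m_cpi:.2f} per year on average")
```

With real data, the points scatter around the line. The slope is therefore an average rate rather than an exact one.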

Direction:

X and Y both go up together or both go down together: positive r.

One goes up while the other goes down: negative r.

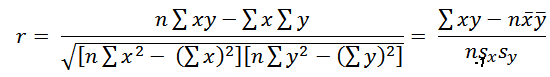

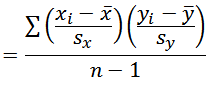
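The following sketch shows where the sign (direction) of r comes from by computing r straight from its definition. The helper `pearson_r` is hypothetical; Python 3.10+ also ships `statistics.correlation`, which should agree. The lists are the "small MPG" data from these notes, with the first list taken as X and the second as Y. The result matches the r = -.395 recorded there.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson's r computed from its definition (illustrative helper)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 points")
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    # Products of deviations: positive when x and y sit on the same side of
    # their means, negative when they sit on opposite sides.
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)
    # s_xx and s_yy are never negative, so the sign of r is the sign of s_xy.
    return s_xy / sqrt(s_xx * s_yy)


# "small MPG" data from these notes (first list as X, second as Y)
small_x = [2844, 3109, 2870, 3095, 2915, 2985, 2563, 3009, 2798, 2468, 2598, 2558]
small_y = [36, 38, 41, 33, 42, 31, 40, 37, 35, 40, 38, 37]
r = pearson_r(small_x, small_y)
print(round(r, 3))  # -0.395: negative, so as one goes up the other tends to go down
```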

Strength: the closer |r| is to 1, the stronger the linear relationship/association.

Observed Y values are close to the values predicted from X, so the residuals are small.
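The next sketch puts "residuals are small" into numbers. It fits the least-squares line, takes the residuals (observed minus predicted), and summarizes them with the standard error of estimate s_e = sqrt(SSE/(n - 2)). This assumes that s_e is the "RMSE(se)" the notes mention. The data are the boats-manatees lists given later, with the first list as X. The sketch also checks the identity r² = 1 - SSE/SST, which is why |r| near 1 goes with small residuals. Helper names are hypothetical.

```python
from math import sqrt


def fit_line(xs, ys):
    """Least-squares slope m, intercept b, and Pearson r (illustrative helper)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)
    m = s_xy / s_xx
    b = y_bar - m * x_bar
    return m, b, s_xy / sqrt(s_xx * s_yy)


# boats-manatees data from these notes (first list as X, second as Y)
boats = [68, 68, 68, 70, 71, 73, 76, 81, 83, 84]
manatees = [53, 38, 35, 49, 42, 60, 54, 67, 82, 78]
m, b, r = fit_line(boats, manatees)

n = len(boats)
y_bar = sum(manatees) / n
res = [y - (m * x + b) for x, y in zip(boats, manatees)]  # observed - predicted
sse = sum(e * e for e in res)                  # sum of squared residuals
sst = sum((y - y_bar) ** 2 for y in manatees)  # total variation in Y
s_e = sqrt(sse / (n - 2))                      # standard error of estimate

print(f"m = {m:.4f}, b = {b:.4f}, s_e = {s_e:.2f}")  # s_e comes out at 6.85
print(abs((1 - sse / sst) - r * r) < 1e-9)  # True: r^2 = 1 - SSE/SST
```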

Correlation may reflect one variable causing the other, but correlation by itself is insufficient to establish causation.
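Several of the properties of r listed next can be checked numerically. This sketch uses hypothetical helper names, with the lottery and tar data copied from these notes (first list as X). It tests shift and scale invariance, the sign flip, symmetry in X and Y, r = 0 for a perfect but non-linear pattern, and how far a single outlier moves r.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson r from sums of deviations (illustrative helper)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)
    return s_xy / sqrt(s_xx * s_yy)


def close(a, b, tol=1e-9):
    return abs(a - b) < tol


# lottery data from these notes (first list as X, second as Y)
x = [334, 127, 300, 227, 202, 180, 164, 145, 255]
y = [54, 16, 41, 27, 23, 18, 18, 16, 26]
r = pearson_r(x, y)

shifted = pearson_r([v + 100 for v in x], [v - 7 for v in y])     # X + c, Y - c
rescaled = pearson_r([2.54 * v for v in x], [0.5 * v for v in y])  # a, b > 0 (unit change)
both_neg = pearson_r([-3 * v for v in x], [-2 * v for v in y])     # a, b < 0: same sign
flipped = pearson_r([-3 * v for v in x], y)                        # a < 0 on X only
swapped = pearson_r(y, x)                                          # swap X and Y
print(close(shifted, r), close(rescaled, r), close(both_neg, r),
      close(flipped, -r), close(swapped, r))  # True True True True True

# A perfect non-linear pattern with r = 0: y = x^2 on x values symmetric about 0
xs = [-3, -2, -1, 0, 1, 2, 3]
r_quad = pearson_r(xs, [v * v for v in xs])
print(r_quad)  # 0.0

# Outlier sensitivity: tar/nicotine data, with and without the (10, 1.8) point
tar = [20, 27, 27, 20, 20, 24, 20, 23, 20, 22, 20, 20, 20, 20, 20, 10, 24,
       20, 21, 25, 23, 20, 22, 20, 20]
nic = [1.1, 1.7, 1.7, 1.1, 1.1, 1.4, 1.1, 1.4, 1.0, 1.2, 1.1, 1.1, 1.1, 1.1,
       1.1, 1.8, 1.6, 1.1, 1.2, 1.5, 1.3, 1.1, 1.3, 1.1, 1.1]
keep = [i for i in range(len(tar)) if (tar[i], nic[i]) != (10, 1.8)]
r_all = pearson_r(tar, nic)
r_trim = pearson_r([tar[i] for i in keep], [nic[i] for i in keep])
print(f"with outlier r = {r_all:.3f}, without r = {r_trim:.3f}")
```

Dropping one point out of 25 changes r from weak to very strong here. This is why outliers should be looked at, not just fed into r.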

r properties:

r is unchanged by X ± c and/or Y ± c.

r is unchanged by aX and/or bY when a and b have the same sign (if only one variable is rescaled, its multiplier must be positive).

r flips sign under aX and bY when a and b have different signs (or when only one variable is multiplied by a negative constant).

r ≈ 0: no linear relationship, but there might be a non-linear one.

r is not "cause-and-effect": r measures association, not causation.

r can be greatly affected by outliers (it is sensitive to outliers).

r is unaffected by swapping X and Y.

r is unaffected by changing measuring units.

Data sets:

lottery:

334 127 300 227 202 180 164 145 255

54 16 41 27 23 18 18 16 26

Clusters:

1 1 1 2 2 2 3 3 3 10

1 2 3 1 2 3 1 2 3 10

1 1 2 2 9 9 10 10

1 2 1 2 9 10 9 10

1 8 8 8 9 9 9 10 10 10

10 1 2 3 1 2 3 1 2 3

Anscombe:

10 8 13 9 11 14 6 4 12 7 5

9.14 8.14 8.74 8.77 9.26 8.1 6.13 3.1 9.13 7.26 4.74

10 8 13 9 11 14 6 4 12 7 5

7.46 6.77 12.74 7.11 7.81 8.84 6.08 5.39 8.15 6.42 5.73

small MPG:

2844 3109 2870 3095 2915 2985 2563 3009 2798 2468 2598 2558

36 38 41 33 42 31 40 37 35 40 38 37

r = -.395

large MPG:

3608 3962 4253 4006 3754 3859 3874 4674 4321 4346 3891 3957

32 27 25 31 28 30 30 24 27 26 33 31

tar:

20 27 27 20 20 24 20 23 20 22 20 20 20 20 20 10 24 20 21 25 23 20 22 20 20

1.1 1.7 1.7 1.1 1.1 1.4 1.1 1.4 1.0 1.2 1.1 1.1 1.1 1.1 1.1 1.8 1.6 1.1 1.2 1.5 1.3 1.1 1.3 1.1 1.1

(10, 1.8) is an outlier.

year (1960 = 1), CPI:

1 13 26 35 42 43 49 53 55 59

29.6 44.4 109.6 152.4 180 184 214.5 233 237 252.2

video BP:

138 130 135 140 120 125 120 130 130 144 143 140 130 150

82 91 100 100 80 90 80 80 80 98 105 85 70 100

video boats-manatees:

68 68 68 70 71 73 76 81 83 84

53 38 35 49 42 60 54 67 82 78

The video says RMSE (s_e) = 6.61; mine is 6.85. They use the wrong numbers for m and b.

POTUS-opponent heights:

177 191 169 190 196 174 179 181 171 177 168 184 195 180 173 177 183 190 169 177 179 177 182 181 194 195 190 177 181 193 184 194

quadratic?
10 8 13 9 11 14 6 4 12 7 5

9.15 8.14 8.74 8.77 9.27 8.09 6.13 3.09 9.13 7.26 4.74

test data:

35 12 65 47 21 32 52 15 57

210 160 285 255 180 220 190 170 275

34 108 64 88 99 51

5 17 11 8 14 5

uniform random 0-10:

1 5 2 6 7 1 2 2 1 2 8 3 3 0 6 5 3 6 9 1 2 4 3 5 2 10 2 1 4 5 3 6 10 7 1 4 7 2 9 2 8 3 1 1 7 8 10 2 4 7 3 0 1 6 6 10 6 7 7 1

Spearman rank correlation coefficient: Rs = 0.657, CV = 0.738

209 353 19 201 344 132 401 126

23 31 7 12 26 5 24 4

Spearman: Rs = .817, CV = .700

361 270 306 22 35 10 8 12 21

2844 1967 1371 1064 667 241 188 154 125

1 1 1 1 2 2 2 2 3 3 3 3 4 4 4 4 5 5 5 5 6 6 6 6 7 7 7 7 8 8 8 8 1 2 3 4 5 6 7 8 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10 1 2 3 4 5 6 7 8 9 10

r = .3:

102 97 107 69 118 90 100 116 81 113 94 100 104 91 93 104 107 102 93 79 89 109 95 100 119 116 103 108 106 78 93 98 126 104 102 102 103 92 105 88 109 97 99 99 111 119 82 80 96 85 116 97 98 106 96 95 101 100 102 99 82 91 101 84 99 101 111 98 118 107 124 111 118 98 102 96 100 93 102 108 112 110 115 87 113 94 102 111 99 79 104 85 90 103 89 93 90 102 79 114

9.5 11.0 11.0 8.4 11.0 10.0 10.7 11.0 9.6 10.0 8.6 8.1 9.9 10.6 8.8 10.4 10.0 10.4 9.2 8.9 9.9 9.1 9.2 11.0 10.3 10.2 9.4 9.8 9.9 10.0 8.5 10.8 10.8 11.7 10.8 9.3 11.5 9.8 8.9 9.3 10.1 9.5 9.7 11.9 9.4 10.7 8.6 9.2 9.3 10.8 8.9 10.4 9.9 11.3 8.9 9.3 10.7 10.0 10.9 11.9 10.3 10.2 9.6 10.4 11.1 10.2 11.1 7.7 9.6 8.9 11.2 9.1 8.3 10.1 9.3 9.6 9.4 10.8 11.4 9.8 10.3 9.3 10.3 9.2 10.9 9.8 11.2 12.1 10.3 8.4 9.9 8.3 10.4 9.5 9.7 9.8 11.7 11.1 9.9 11.3

r = .5:

88 108 101 109 95 107 90 92 105 86 105 102 97 99 89 110 100 101 87 112 108 108 99 91 73 101 104 104 102 91 96 94 99 113 110 87 98 100 101 101 106 121 106 113 106 83 90 100 83 119 83 101 109 96 92 111 105 91 99 90 107 109 89 101 123 103 89 95 94 93 85 113 107 89 117 93 99 99 100 100 91 101 98 93 81 139 88 115 102 100 112 104 114 100 113 106 89 101 82 100

11.0 10.0 9.6 11.4 9.5 10.6 9.9 9.5 10.4 9.6 9.8 9.6 9.2 10.5 8.6 10.2 10.8 10.1 9.0 12.2 10.5 10.1 9.7 9.5 7.7 10.5 11.3 10.2 10.2 9.2 11.3 11.0 8.8 10.9 9.6 9.7 10.0 9.5 9.6 10.5 8.4 11.8 10.7 10.5 10.2 8.9 9.5 10.5 7.8 11.2 10.1 9.7 10.6 10.7 9.0 11.6 9.7 11.1 10.2 9.6 10.7 11.0 8.3 9.8 11.0 10.5 9.8 9.0 10.4 9.2 10.0 12.2 9.8 9.0 10.0 9.6 9.9 8.4 8.4 10.1 10.0 9.8 10.7 10.6 8.9 10.6 10.6 10.5 9.5 9.8 10.0 10.3 9.7 10.1 11.4 10.3 10.3 10.1 8.5 8.3

r = .7:

95 103 107 105 104 89 99 96 106 99 97 94 89 99 111 100 120 107 106 103 98 107 109 96 108 99 114 95 90 100 85 108 108 113 104 106 98 114 118 104 108 97 96 109 97 101 90 111 116 86 100 93 98 96 92 92 78 89 82 111 96 84 100 103 102 110 92 100 91 100 93 107 100 95 114 83 102 103 117 86 110 102 104 70 82 116 100 93 93 87 115 110 91 104 110 95 101 90 102 104

8.3 11.5 10.3 10.2 10.3 9.0 9.8 8.3 10.7 10.1 11.0 9.4 9.8 10.2 11.0 9.2 11.2 10.3 10.5 9.5 11.1 12.2 11.3 10.1 10.5 9.2 10.0 8.8 9.1 9.9 8.3 10.9 9.7 11.9 10.0 10.3 9.2 11.5 12.2 10.7 10.1 9.3 9.4 11.3 9.5 10.7 9.4 11.2 12.8 9.9 7.9 8.0 10.4 9.6 10.3 9.1 9.7 6.8 8.8 9.7 10.8 9.0 10.0 9.4 11.2 10.8 10.2 9.2 8.4 9.5 10.0 11.3 10.1 9.4 10.8 8.6 9.3 11.0 10.1 9.8 11.4 9.6 11.0 7.0 7.8 12.3 8.2 9.6 10.3 9.1 12.2 10.2 10.1 10.1 10.7 9.5 10.4 10.2 9.5 10.6
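This sketch computes Spearman's rank correlation as Pearson's r applied to the ranks, with tied values given their average rank. It also computes the shortcut 1 - 6Σd²/(n(n² - 1)), which only holds exactly when there are no ties. The data are the "Spearman Rs=.817 CV=.700" lists from these notes (first list as X). The decision rule assumed here calls the result significant when |Rs| exceeds the critical value, taken as given in the notes. Helper names are made up.

```python
from math import sqrt


def average_ranks(values):
    """Rank values 1..n; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson_r(xs, ys):
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_yy = sum((y - y_bar) ** 2 for y in ys)
    return s_xy / sqrt(s_xx * s_yy)


def spearman_rs(xs, ys):
    """Spearman's rank correlation: Pearson r computed on the ranks."""
    return pearson_r(average_ranks(xs), average_ranks(ys))


def spearman_rs_no_ties(xs, ys):
    """Shortcut 1 - 6*sum(d^2) / (n(n^2 - 1)); exact only when there are no ties."""
    n = len(xs)
    rx, ry = average_ranks(xs), average_ranks(ys)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d2 / (n * (n * n - 1))


x = [361, 270, 306, 22, 35, 10, 8, 12, 21]
y = [2844, 1967, 1371, 1064, 667, 241, 188, 154, 125]
rs = spearman_rs(x, y)
critical_value = 0.700  # CV from the notes for this data (n = 9)
print(round(rs, 3), round(spearman_rs_no_ties(x, y), 3))  # 0.817 0.817
print("significant" if abs(rs) > critical_value else "not significant")
```

Computing Pearson's r on the ranks handles ties correctly. The d² shortcut is easier by hand, but with ties it only approximates the rank-based value.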